Exploring Parallel Computing using MPI and C++: Part 2 - Basic MPI Programming Concepts

Introduction

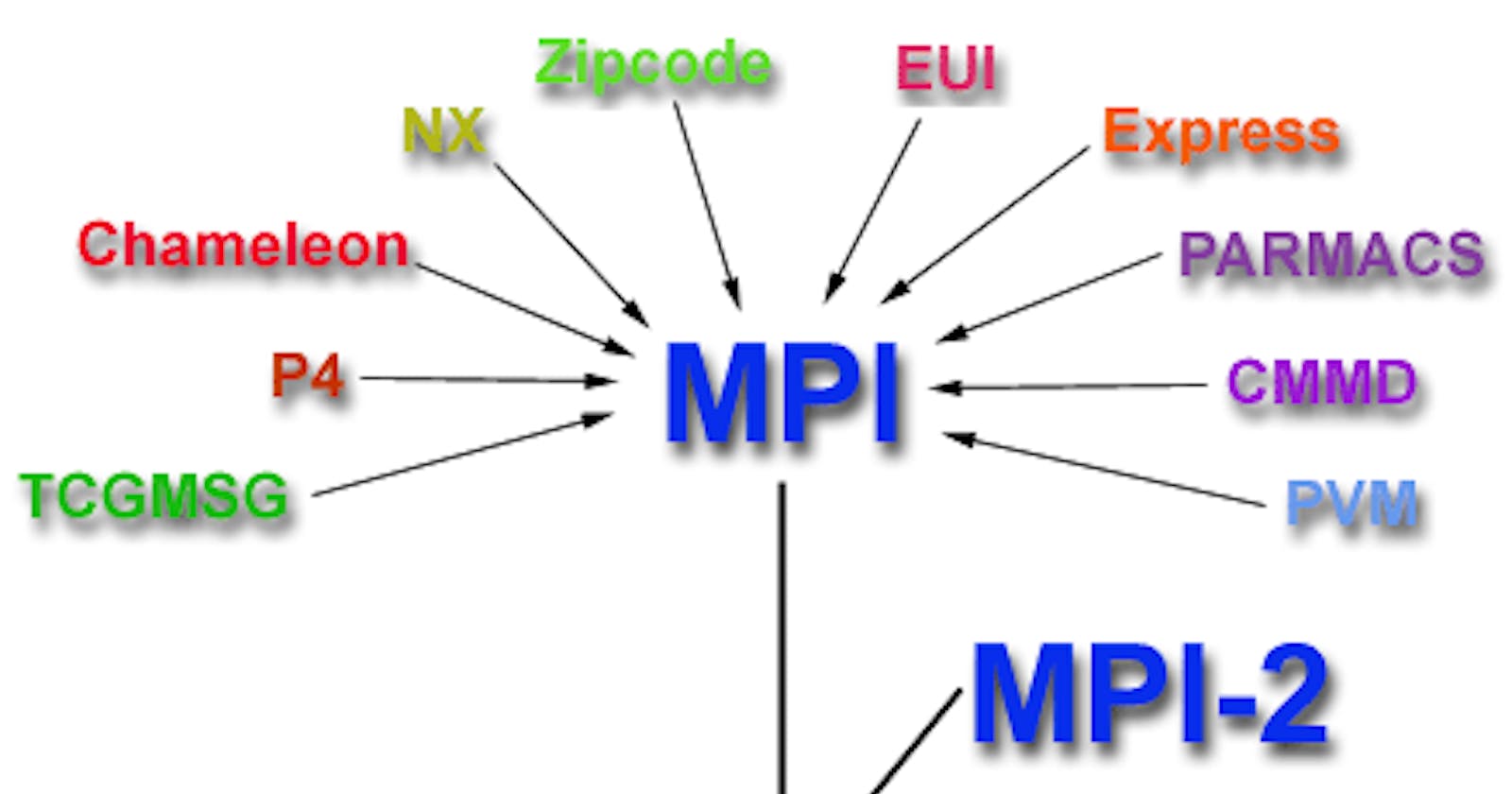

Welcome back to our blog series on parallel computing using MPI and C++. In the previous post, we introduced the fundamentals of parallel computing, MPI, and the importance of parallel computing in today's data-driven world. In this second installment, we will delve into the basics of MPI programming. We will explore the concepts and functions that form the building blocks of MPI programs, empowering you to write your own parallel programs using MPI and C++.

MPI Communication Modes

MPI provides two primary communication modes: point-to-point communication and collective communication.

- Point-to-Point Communication: Point-to-point communication involves sending messages between individual processes. MPI provides functions such as `MPI_Send` and `MPI_Recv` for this. `MPI_Send` sends a message to a destination rank, while `MPI_Recv` receives a message from a source rank (or from any rank, using `MPI_ANY_SOURCE`). Both calls also take a buffer, an element count, an MPI data type, a tag, and a communicator, so the same two functions can carry many kinds of data.

Point-to-point communication can be used for various scenarios, such as exchanging data between neighboring processes in a grid or passing messages in a pipeline-like computation.

- Collective Communication: Collective communication involves every process in a group (a communicator) at once. MPI provides functions like `MPI_Bcast`, `MPI_Scatter`, and `MPI_Gather` to perform collective operations.

`MPI_Bcast` lets one process (the root) broadcast data to all other processes in the group. `MPI_Scatter` divides an array on the root into equal-sized chunks and sends one chunk to each process in the group, the root included. `MPI_Gather` does the reverse: it collects a chunk of data from every process and assembles the chunks into a single array on the root.

Collective communication is useful when distributing workloads or collecting results from all processes.

MPI Process Topology

In MPI, the processes of a communicator can optionally be given a virtual topology, which describes their logical arrangement and communication pattern. The two common kinds are the Cartesian topology and the graph topology.

Cartesian Topology: In a Cartesian topology, processes are arranged in a grid-like structure. Functions like `MPI_Cart_create` and `MPI_Cart_shift` create the grid and look up the neighboring ranks along each of its dimensions, for example along rows or columns.

Graph Topology: A graph topology allows more general connections between processes. `MPI_Graph_create` builds the topology, and neighborhood collectives such as `MPI_Neighbor_allgather` exchange data over it. Each process can have a different number of neighbors.

MPI Data Types

MPI supports various data types to handle different kinds of data efficiently. These include basic types (e.g., MPI_INT, MPI_FLOAT) and derived types, which users build from existing types with constructors such as MPI_Type_contiguous and MPI_Type_vector. Using appropriate data types in MPI programs ensures efficient data transfer and correct interpretation of data on the receiving end.

For complex data structures, MPI provides MPI_Type_create_struct to describe the layout of a struct field by field. The new type must then be committed with MPI_Type_commit before it can be used in communication.

Error Handling in MPI

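Error handling is essential in parallel programs to detect and handle errors gracefully. MPI provides error codes and error handlers to capture and manage errors that may occur during program execution. By default, an error on a communicator aborts the whole program (MPI_ERRORS_ARE_FATAL). The MPI_Comm_set_errhandler function attaches a different error handler to a communicator, such as MPI_ERRORS_RETURN, and the MPI_Comm_get_errhandler function retrieves the current one. These replace the older MPI_Errhandler_set and MPI_Errhandler_get, which were deprecated in MPI-2 and removed in MPI-3.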

Debugging Parallel Programs

MPI Tool Information Interface (MPI-3): MPI-3 introduced a standardized tool information interface, known as MPI_T. It provides functions like `MPI_T_cvar_handle_alloc` and `MPI_T_cvar_write` that let programmers read and set control variables of the MPI implementation, alongside performance variables that expose its internal state. These functions help developers gather information about the MPI implementation and its runtime behavior, which aids in identifying and resolving issues.

Parallel Debuggers: Parallel debuggers, such as TotalView and DDT (Distributed Debugging Tool), are specifically designed to debug parallel programs. These tools offer features like process visualization, breakpoints, and message tracking, allowing developers to examine the behavior of each process and detect errors or synchronization issues.

Using these debugging tools and techniques can help identify and resolve issues in parallel programs efficiently, ultimately improving the overall reliability and performance of the applications.

Conclusion

In this blog post, we explored the basic concepts of MPI programming. We learned about point-to-point communication and collective communication modes provided by MPI. Additionally, we discussed MPI process topologies, MPI data types, error handling, and debugging techniques for parallel programs. Armed with this knowledge, you are now equipped to start writing your own MPI programs to leverage the power of parallel computing.

In the next part of our series, we will dive deeper into MPI programming by discussing message passing using point-to-point communication and implementing collective operations in MPI. Stay tuned for Part 3, where we will explore more advanced MPI programming concepts! Happy parallel programming! Keep Bussing!!